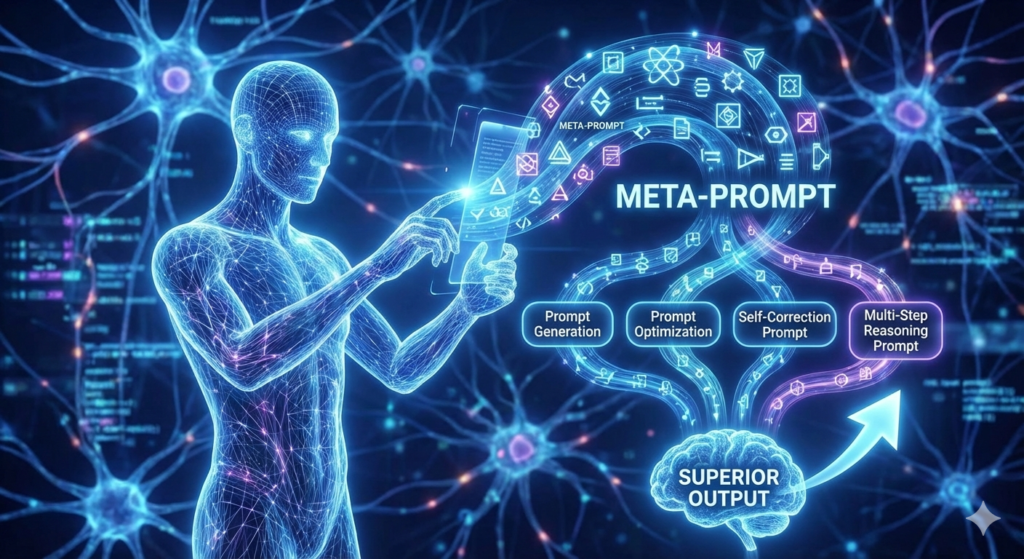

Meta-prompting is rapidly gaining traction as a transformative technique in the field of artificial intelligence, particularly in the realm of prompt engineering. As AI systems become more sophisticated, the need for improved interaction paradigms is paramount. Meta-prompting emerges as a promising solution, offering a new way to enhance AI model performance, contextual understanding, and overall interaction quality. This blog post delves into the strategic insights surrounding meta-prompting and its implications for AI development and application.

Meta-Prompting as a Transformative Technique in AI

Meta-prompting represents a significant shift in how AI systems are guided to produce outputs. Traditional prompting involves providing an AI model with a direct instruction or question, which it then uses to generate a response. In contrast, meta-prompting employs higher-order prompts that serve as a guide for the AI to interpret and respond to initial prompts more effectively.

Meta-prompting introduces an additional layer of prompts that instruct the AI on how to handle the initial input. This approach can lead to enhanced accuracy and contextual relevance, as it allows AI models to better understand the nuances and complexities of the input data. By refining how AI systems interpret prompts, meta-prompting can result in more sophisticated and human-like communication.

Consider a scenario where a user asks an AI model, “What are the health benefits of green tea?” Using meta-prompting, an additional prompt might guide the AI to consider recent scientific studies, historical context, and potential side effects, resulting in a more comprehensive and well-rounded response. This layered approach can significantly improve the quality of information provided by AI systems.
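
The green tea scenario can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the `build_messages` helper, the wording of the meta-prompt, and the role/content message layout are all assumptions made for this example. The layout resembles the chat format that many model APIs accept, but no particular API is called here.

```python
# Hypothetical meta-prompt: it tells the model HOW to approach the question
# rather than asking anything itself.
META_PROMPT = (
    "When answering the user's question, consider recent scientific studies, "
    "historical context, and potential side effects. "
    "Present the answer as a balanced, well-rounded summary."
)


def build_messages(user_prompt: str, meta_prompt: str = META_PROMPT) -> list[dict[str, str]]:
    """Layer a meta-prompt on top of the user's original prompt."""
    return [
        {"role": "system", "content": meta_prompt},  # higher-order guidance
        {"role": "user", "content": user_prompt},    # the initial prompt, unchanged
    ]


messages = build_messages("What are the health benefits of green tea?")
for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

The user's question is passed through untouched. Only the guidance layered around it changes, so the same meta-prompt can be reused across many questions.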

Improved AI Model Performance and Efficiency

The integration of meta-prompting techniques has been shown to enhance AI model performance, particularly in tasks involving natural language processing (NLP). By directing how models should interpret and respond to prompts, meta-prompting can lead to better task-specific outcomes.

Meta-prompting enables AI models to generate text that is more coherent and relevant to the user’s query. This improvement is crucial for applications such as chatbots, virtual assistants, and automated content generation, where the quality of interaction is paramount. By improving performance and efficiency, meta-prompting positions itself as a critical component in the future development of AI systems.

Structured guidance can also improve operational efficiency. When a meta-prompt spells out how a request should be handled, the model has less ambiguity to resolve, which can mean fewer clarifying turns and retries and, in turn, faster end-to-end responses and lower overall computational overhead.

Enhanced Contextual Understanding and Language Comprehension

One of the key advantages of meta-prompting is its ability to enhance the contextual understanding and language comprehension of AI systems. With layered prompts, AI models can achieve a deeper comprehension of complex language constructs.

Meta-prompting allows AI to navigate intricate linguistic tasks that require nuanced understanding. For instance, when interpreting a piece of literature or analyzing conversational context, meta-prompts can guide the AI to consider various perspectives, themes, and emotional tones, leading to richer and more insightful outputs.
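
A meta-prompt of this kind can be generated from a list of angles instead of being written by hand each time. The sketch below assumes a hypothetical `make_meta_prompt` helper. The task description and the three aspects are taken from the literature example above, and the surrounding wording is only one possible phrasing.

```python
def make_meta_prompt(task: str, aspects: list[str]) -> str:
    """Build a meta-prompt asking the model to address each aspect in turn."""
    if not aspects:
        raise ValueError("at least one aspect is required")
    lines = [f"You are about to {task}. Before answering, work through these angles:"]
    # Number the aspects so the model can address them one by one.
    lines += [f"{i}. {aspect}" for i, aspect in enumerate(aspects, start=1)]
    lines.append("Then combine your observations into a single, coherent response.")
    return "\n".join(lines)


literary_meta_prompt = make_meta_prompt(
    task="interpret a piece of literature",
    aspects=["differing perspectives", "recurring themes", "emotional tone"],
)
print(literary_meta_prompt)
```

Swapping in a different task and aspect list, for example conversational context with speaker intent and prior turns, yields a meta-prompt for another domain without changing the helper.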

In fields such as legal analysis, medical diagnostics, and customer service, the ability to comprehend complex language is essential. Meta-prompting enhances AI’s capability to handle these tasks, improving accuracy and reliability.

Framework for AI Model Training and Development

Meta-prompting offers a novel framework for enhancing AI model training and development processes. By integrating meta-prompts into the training regimen, developers can train AI models more effectively.

The use of meta-prompts allows AI models to learn how to generate high-quality outputs more rapidly. This approach accelerates the training process, thereby reducing development time and costs. Additionally, it improves the adaptability and precision of AI outputs, resulting in solutions that better meet user needs.
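
The post does not specify how meta-prompts would enter a training pipeline. One possible reading, shown here purely as an assumption, is to prepend the meta-prompt to each training input so the model sees the guidance alongside the question. The `to_training_record` helper and the `prompt`/`completion` field names are hypothetical and are not tied to any particular training framework.

```python
import json


def to_training_record(meta_prompt: str, user_prompt: str, target: str) -> str:
    """Serialize one example as a JSON line; the field names are illustrative."""
    record = {
        # Guidance first, then the original question, separated by a blank line.
        "prompt": f"{meta_prompt}\n\n{user_prompt}",
        "completion": target,
    }
    return json.dumps(record)


line = to_training_record(
    meta_prompt="Answer concisely and mention any important caveats.",
    user_prompt="What are the health benefits of green tea?",
    target="<a reviewed reference answer goes here>",  # placeholder, not real data
)
print(line)
```

Each call produces one line of a JSON Lines file. Whether training on such records actually shortens development time would need to be measured for the model and task at hand.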

By adopting meta-prompting in model training, developers can explore new avenues for AI capabilities. This method encourages innovation in prompt engineering and offers the potential to develop AI systems that are more responsive and intuitive.

Strategic Implications for AI Interaction Paradigms

The rise of meta-prompting has strategic implications for AI interaction paradigms, potentially transforming how AI systems engage with users and other systems.

Meta-prompting enhances communication capabilities by refining AI model responses. This refinement leads to interactions that are more intuitive and seamless, thereby improving user experiences.

As AI systems become more adept at interacting with users through meta-prompting, the adoption and integration of AI technologies across various domains are likely to increase. Industries such as healthcare, finance, and education stand to benefit from more sophisticated AI interactions.

Conclusion

Meta-prompting is set to redefine the landscape of AI interactions, offering substantial improvements in performance, contextual understanding, and model training. By leveraging higher-order prompts, AI systems can achieve greater accuracy and relevance in their outputs, ultimately enhancing user experiences and driving broader adoption of AI technologies. As the field of prompt engineering continues to evolve, meta-prompting is likely to play a pivotal role in shaping the future of AI communication.