Introduction

In the rapidly evolving field of artificial intelligence, zero-shot prompting has emerged as a groundbreaking technique, enabling language models to perform tasks they have not explicitly been trained on. This is achieved by crafting prompts that guide pre-trained models to generate relevant outputs without the need for additional training data specific to the task. The significance of zero-shot prompting lies in its potential to save resources and time, making it a valuable tool for developers and researchers alike. In this article, we will explore the strategic insights related to zero-shot prompting, focusing on prompt design, integration of contextual information, challenges, multilingual applications, and the vulnerability to adversarial attacks.

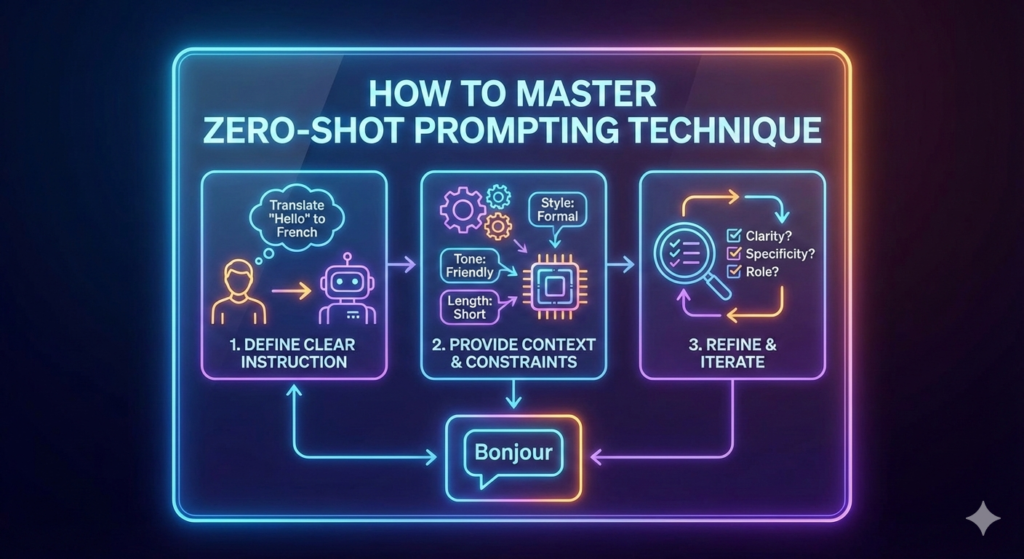

Importance of Prompt Design in Zero-Shot Learning

Prompt design is crucial in zero-shot learning, as it directly impacts the performance of language models in tasks such as text classification. Effective prompts can enhance model accuracy by aligning closely with task requirements. Prompt engineering is not just a supplementary tool but a fundamental aspect of zero-shot text classification. Crafting prompts that resonate with the context and semantics of the task can lead to significant improvements in performance. This necessitates the development of guidelines and best practices for prompt design, ensuring that prompts are both intuitive and effective.

Example of Effective Prompt Design

Consider a sentiment analysis task where the model needs to determine the sentiment of a given sentence. An effective prompt might be: “Is the sentiment of this sentence positive, negative, or neutral? ‘I love the new features of this product.'” This prompt explicitly guides the model to classify the sentiment, leveraging the pre-trained language model’s understanding of sentiment-related language.

Integration of Contextual Information

Incorporating contextual information into prompts can significantly improve zero-shot learning outcomes. By utilizing external knowledge sources, prompts can be contextualized to enhance model performance. For instance, research demonstrates that contextualized prompts can bridge the gap between zero-shot and few-shot learning, reducing the reliance on large labeled datasets. This approach not only improves accuracy but also allows models to adapt to various domains and applications without extensive retraining.

Example of Contextualized Prompts

In a document classification task, a contextualized prompt might be: “Based on the following historical context, classify this document: ‘The industrial revolution marked a pivotal turning point in history.’ Is this document about economics, history, or technology?” By providing background information, the model can make more informed predictions.

Challenges and Limitations of Zero-Shot Prompting

Despite its advantages, zero-shot prompting faces several challenges and limitations, particularly in tasks that require complex reasoning or domain-specific knowledge. As noted, identifying these boundaries is crucial for setting realistic expectations. Models may struggle with intricate logical reasoning or when specialized knowledge is necessary for accurate predictions. To mitigate these challenges, enhancing model robustness against adversarial attacks and developing strategies to improve reasoning capabilities are essential.

Addressing Complex Tasks

For complex tasks, combining zero-shot prompting with few-shot learning techniques or leveraging domain-specific knowledge bases can help overcome limitations. This hybrid approach allows models to access additional information when needed, improving accuracy and reliability.

Multilingual and Cross-Lingual Applications

Zero-shot prompting techniques hold significant promise in multilingual text understanding. By translating and adapting prompts, language models can perform tasks across different linguistic contexts without the need for language-specific training data. Research highlights the importance of prompt translation and adaptation in developing language-agnostic models. This capability not only expands the applicability of language models but also supports the creation of more inclusive AI systems that cater to diverse linguistic audiences.

Example of Multilingual Prompting

For a cross-lingual sentiment analysis task, a prompt could be adapted as follows: “¿Es el sentimiento de esta oración positivo, negativo o neutral? ‘Me encanta las nuevas características de este producto.'” This adaptation allows the model to process and understand sentiment in Spanish, demonstrating the flexibility of zero-shot prompting.

Vulnerability to Adversarial Attacks

One of the significant challenges of zero-shot prompting is its vulnerability to adversarial attacks. These attacks can manipulate prompts or inputs to produce inaccurate or misleading outputs, posing a threat to the reliability of AI systems. Researchers emphasize the need for developing robust prompt designs and defense mechanisms to enhance resilience against such attacks. Ensuring the integrity and trustworthiness of AI systems utilizing zero-shot prompting is critical for their successful deployment in real-world applications.

Strategies for Enhancing Security

To defend against adversarial attacks, researchers can implement strategies such as input validation, adversarial training, and the use of robust prompting techniques. These measures can help safeguard models from manipulation, maintaining their accuracy and reliability.

Conclusion

Zero-shot prompting represents a transformative approach in the field of AI, offering significant advantages in terms of efficiency and adaptability. By focusing on effective prompt design, integrating contextual information, addressing challenges, and exploring multilingual applications, researchers and developers can harness the full potential of zero-shot prompting. However, it is crucial to recognize and mitigate the vulnerabilities to adversarial attacks to ensure the reliability and security of AI systems. As the field continues to evolve, zero-shot prompting will likely play an increasingly pivotal role in the development of versatile and robust language models.